MLL Lab

Machine Learning and Language

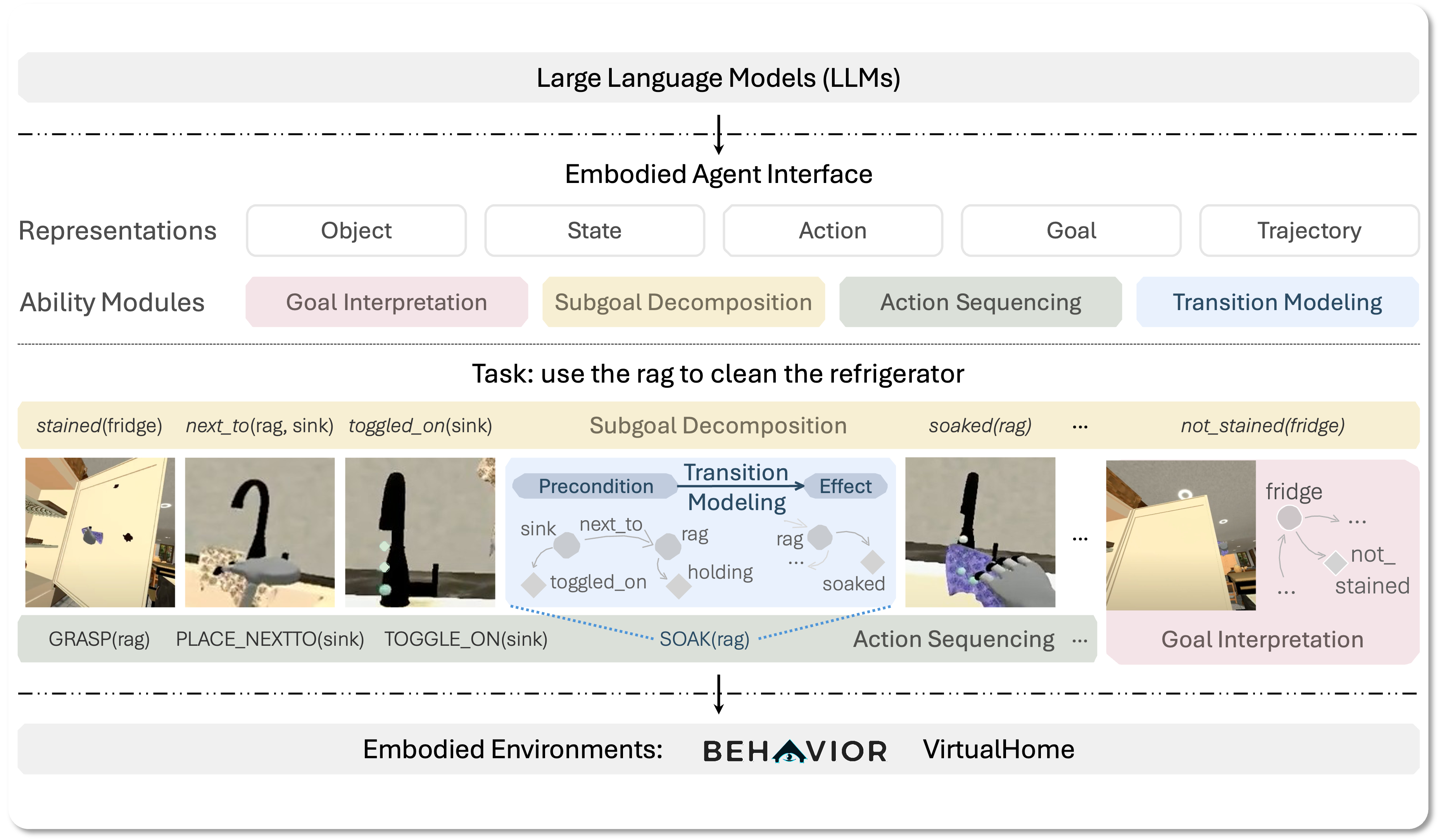

We develop intelligent language + X (vision, robotics, etc.) models that reason, plan, and interact with the physical world.

📰 Latest News

March 2025 — We will organize the CVPR 2025 Workshop on Foundation Models for Embodied Agents with Ruohan Zhang, Yunzhu Li, Jiayuan Mao, Wenlong Huang, Qineng Wang, Yonatan Bisk, Shenlong Wang, Fei-Fei Li, and Jiajun Wu. The call for papers is open!

December 2024 — We will organize the tutorial on Foundation Models for Embodied Agents at AAAI 2025 and NAACL 2025 with Yunzhu Li, Jiayuan Mao, and Wenlong Huang.

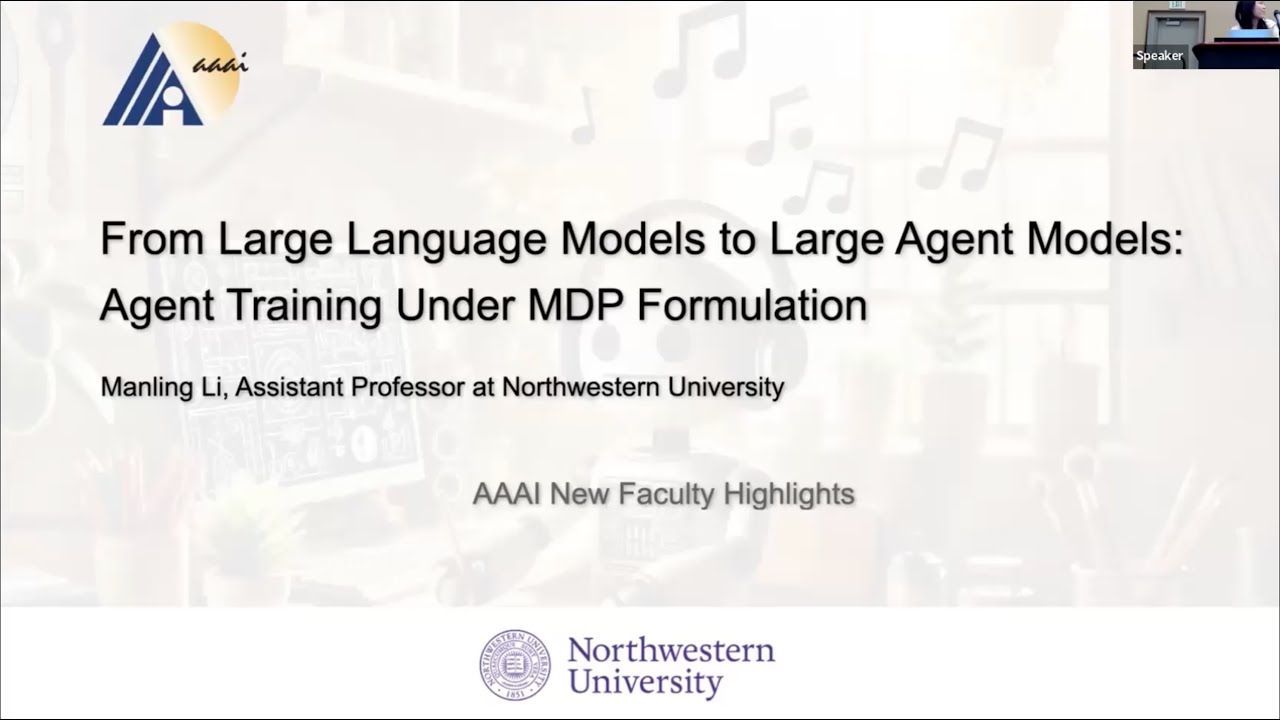

December 2024 — Prof. Manling Li has been selected for the AAAI 2025 New Faculty Highlights program.

November 2024 — Our Embodied Agent Interface received the Best Paper Award (top 0.4%) at SoCal NLP 2024!

October 2024 — Our Embodied Agent Interface has been selected for an oral presentation at NeurIPS 2024 (top 0.6% of the Datasets and Benchmarks track, top 0.4% across all tracks)!